Google researchers share study showing how AI can be used to turn brain scans into music

The “Brain2Music” project from Google and Osaka University could be a first step towards generating music directly from thoughts

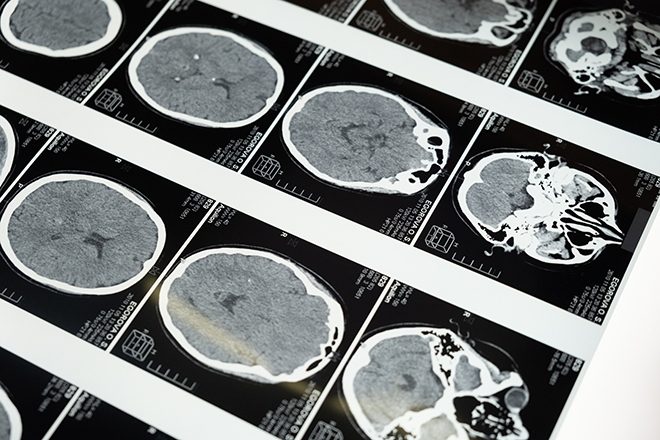

Google and Osaka University in Japan have published research demonstrating an interesting technique for turning brain activity into music.

Five volunteers were placed inside an fMRI [functional magnetic resonance imaging] scanner and were played over 500 tracks across 10 different musical styles. Their brain activity was captured as images and embedded into Google's AI music generator, MusicLM, which meant that the software was conditioned on the brain patterns and responses of the individuals.

The music created was similar to the stimuli the subjects received, with subtle semantic variations. The report’s abstract says that “The generated music resembles the musical stimuli that human subjects experienced, with respect to semantic properties like genre, instrumentation, and mood.”

The study also found that the brain regions “representing information derived from text and music overlap”, and noted similarities between two MusicLM components and brain activity in the auditory cortex.

Summarising their findings, the researchers said that “When a human and MusicLM are exposed to the same music, the internal representations of MusicLM are correlated with brain activity in certain regions.”

They continued: “When we use data from such regions as an input for MusicLM, we can predict and reconstruct the kinds of music which the human subject was exposed to.”

Though it is thought that such research could bring us closer to translating thoughts into music via some sort of hyper-intelligent software, the paper noted that building a universal model would be difficult since brain activity differs greatly from one person to the next. The next step would be to find a way to make music purely from human imagination, rather than from inputted stimuli.

Check out the full paper here, where you can also listen to the AI-generated music.

Tiffanie Ibe is Mixmag’s Digital Intern. Follow her on Instagram